Negative stereotypes and evidence-based policy

Cynicism has been creeping into debates over evidence-based policy. We've certainly noticed this in the last two PTP symposiums, with speakers urging a more realistic adoption of 'informed policy'. Below, Kathryn Oliver, Simon Innvær, Theo Lorenc, Jenny Woodman, and James Thomas examine some of the on-going barriers to evidence-based (and even evidence-informed) policy. This post originally appeared on the LSE Impact Blog.

Despite several decades of work on evidence-based policy, the goals of improving research uptake and promoting greater use of research within policy making are still elusive. Academic interest in the area grew out of Evidence-Based Medicine, dating back to Archie Cochrane’s book Effectiveness and Efficiency: Random Reflections on Health Services published in 1972. To describe the current body of literature in the area, we carried out a systematic review, updating an earlier study of the reasons why policy-makers use (or don’t use) evidence. We found 145 papers from countries all around the world, covering a wide range of policy areas, from health to criminal justice, to transport, to environmental conservation. We summarise our findings below in the hope of reducing the barriers to evidence-based policy.

What are the major barriers to policymakers using evidence?

Despite these very different contexts, the barriers to and facilitators of research use were remarkably similar. Researchers, for example, found that poor access to research, poor dissemination and costs were still a problem. A dearth of clear, relevant and reliable research evidence, and difficulty finding and accessing it, were the main barriers to the use of research. Access to research, and managerial and organisational resources to support finding and using research, were all important factors.

Institutions and formal organisations themselves have an important role to play. For example, decision-making bodies often have formal processes by which to consult on and take decisions. Participation of organisations such as NICE can help research to be part of a decision, but many study participants saw the influence of vested interest groups and lobbyists as an obstacle to the use of evidence. The production of guidelines by professional organisations was also noted in the review as a possible facilitator of evidence use by policy makers; however, in cases where the organisation was itself regarded as not being influential (such as the WHO) this was not the case. Whether true or not, these perceptions can damage an organisation’s ability to change practice or policy. On the other hand, institutions can make a positive difference by providing clear leadership to champion evidence-based policy.

Evidence comes from people we know, not journals

Unsurprisingly, good relationships and collaborations between researchers and policymakers help research use. This is a common finding: we already knew that policymakers often prefer to get information and advice from friends and colleagues, rather than papers and journals. One consequence is that policymakers may take advice from academics they already know through university or school. In the UK, that tends to mean Oxbridge, LSE, UCL and King's.

In our everyday lives, we seek advice from friends and colleagues; we don’t trust people who have been wrong before, or who seem to be biased – or with whom we just don’t get along. Exactly the same applies to the process of policymaking and, in our experience, to the process of research collaboration. Negative stereotypes abound on both sides, and our review found that personal experiences, judgments, and values were important factors in whether evidence was used.

Policymakers and researchers of course have very different pressures, working environments, demands, needs, career paths and merit structures. Their definitions of ‘evidence’, ‘use’ and ‘reliability’ are also likely to differ. Hence, they have very different perceptions of the value of research for policy-making.

However, while these differing perceptions are well documented, we know surprisingly little about what policy-makers actually do with research. This gap helps to perpetuate academics’ commonly held view that policymakers do not use evidence—a negative stereotype that, as any politician will tell you, is hardly conducive to trust or collaboration. As a result, we still do not understand how to improve research impact on policy – or, indeed, why we should try.

Do definitions matter?

Policymakers tend to have a broader definition of evidence than that usually accepted by academics. Academic researchers, understandably, tend to think of ‘evidence’ as academic research findings, while policy-makers often use and value other types of evidence. For example, over a third of the studies mentioned the use of informal evidence such as local data or tacit knowledge.

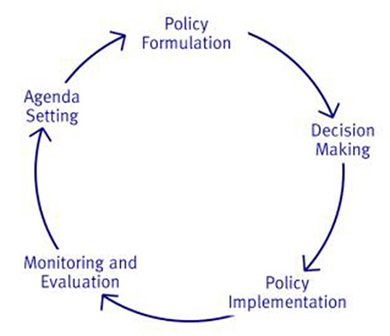

Another factor reported to facilitate the use of evidence is timely access to good quality and relevant research evidence. However, ‘making policy’ and ‘using evidence’ are both really difficult to do and to understand. These are not simple, linear processes.

Linear Stages of Policy Process from the Rational Framework. Adapted from Policy Analysis: A Political and Organizational Perspective by William Jenkins (1978, p. 17).

Figure 1: Examples of models of the policy process found in academia. Source: Wellcome Trust Educational Resources.

But nor are they repeatable, predictable cycles of events. The reality is likely to be far more complicated. The role of chance, e.g. who was available that day, or who you happened to have a conversation with, is hard to overestimate.

It is worth asking why most policymakers are not prioritising overcoming these barriers themselves. It is possible – even likely – that politicians do not want their pet policies undermined by evidence. However, we believe the research on this question, as well as much of the debate among policy-makers and commentators about research impact and evidence-based policy, is based on three flawed assumptions: 1) policymakers do not use evidence; 2) policymakers should use more evidence; and 3) if research evidence had more impact, policy would be better.

In fact, these assumptions tell us more about academics than about policy. Policy uses evidence all the time—just not necessarily research evidence. Academics used to giving 45-minute seminars do not always understand that a hard-pressed policymaker would prefer a 20-second phone call. Nor do researchers understand the types of legitimate evidence, such as public opinion, political feasibility and knowledge of local contexts, that are essential to understanding how policies happen.

There are a great many finger-wagging papers telling policymakers to ‘upskill’, but few are urging academics and researchers to learn about the policy process. What are the incentives for policymakers to try and engage with science and evidence, when it takes too long, is often poor quality, and they are told they are not skilled enough to understand it?

There are important unanswered questions relating to the use of research in policy. We don’t know how evidence actually impacts policy, or how policy-makers ‘use’ evidence—to create options? Defeat opponents? Strengthen bargaining positions? What is the role of interpersonal interaction in policy? Finally, does increasing research impact actually make for better policy, whatever this means? Academics have any number of stories about poor policies that ignore research, but less in the way of rigorous evidence showing the beneficial impact of research.

Other countries have tried creating formal organisations and spaces for relationships to flourish. In the USA, for an academic to leave a university and work at an organisation such as Brookings is seen as a promotion, not a failure. Australia’s Sax Institute provides a forum for policymakers and academics to come together, similar to the ideas behind Cambridge’s Centre for Science and Policy.

It remains to be seen how influential these organisations will be, and whether they succeed in changing the stereotypes and behaviours of both policymakers and academics. And critically, whether relationships brokered in this way can transcend the traditional ‘expert advice’ model of research mobilisation to encompass evidence that aims to be an accurate view of the totality of current knowledge on a problem – like this systematic review. In the meantime, rigorous studies of the three common misconceptions about evidence-based policy will help us all understand how and whether we should get which evidence into policy. Solutions are better than excuses.

This blog has been previously published by Research Fortnight and the Alliance for Useful Evidence.

Featured Image credit: U.S. Department of State (Wikimedia, public domain)

Note: This article gives the views of the authors, and not the position of the Impact of Social Science blog, nor of the London School of Economics.

Kathryn Oliver is a Provost Fellow in the Department of Science, Technology, Engineering and Public Policy (STEaPP) at UCL. She is interested in the debates about evidence-based policy, the role of policy networks, and ways of thinking about the impact of research on policy.

Simon Innvær is an associate professor at Oslo University College, Norway. He is interested in evidence-based policy, and the combination of process and effect evaluations.

Theo Lorenc is Provost Fellow in the Department of Science, Technology, Engineering and Public Policy (STEaPP) at UCL. His research focuses on interactions between knowledge communities.

James Thomas is Assistant Director for Health & Wellbeing at the Institute of Education in London and Director of the EPPI-Centre’s Reviews Facility for the Department of Health, England, which undertakes systematic reviews across a range of policy areas to support the department. He specialises in developing methods for research synthesis, in particular for qualitative and mixed methods reviews and in using emerging information technologies such as text mining in research. Prof Thomas leads a module on synthesis and critical appraisal on the EPPI-Centre’s MSc in Research for Public Policy and Practice and development on the Centre’s in-house reviewing software, EPPI-Reviewer.